Jun 3, 2025 | 7 minutes

4 steps every business must consider when building its agentic AI stack

AI is powerful, but it's most powerful when implemented with care. Darin Patterson, VP of Market Strategy at Make, discusses the four major considerations to keep in mind as you build an AI-first company.

Recently I was delighted to speak on a panel at the Hard Skill Exchange Agentic AI Summit. At the core of the session was an exploration of how to build winning go-to-market strategies for agentic AI, and, as you might expect from a conversation among industry leaders at this pivotal time in AI development, what unfolded was profoundly thought-provoking. My fellow roundtable participants and I had a chance to consider the nitty-gritty steps any organization needs to take to safely, sensibly, and effectively fuse its processes with agentic automation. Here are my takeaways.

Planning the right infrastructure is key

As humans and automation have evolved together, especially over the last 100 years, we've done a good job of understanding how to evaluate the performance of humans in organizations when they take action. But as AI-first firms emerge and existing organizations feel the pressure to apply AI agents to stay competitive, many companies are going to face the challenge of finding clear approaches to testing and performance monitoring. How do we evaluate performance in the age of agentic systems?

There is a critical need now, and there will be in the future, for systematic approaches and a whole structure around evaluations in AI-powered business processes. Organizations at all levels are going to need to think through specific strategies for how any particular AI agent is applied: How do we give AI what it needs to do the job? How do we get AI to do that job properly? And even if an AI agent is working right now, how do we detect drift and ensure it continues to produce the results we expect?
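To make the drift question a little more concrete, here's a minimal sketch in Python, assuming you keep a fixed set of evaluation cases with known-good answers and rerun your agent against them on a schedule. Everything in it is hypothetical and simplified for illustration: the `pass_rate` and `has_drifted` helpers, the exact-match scoring, and the 0.05 tolerance aren't taken from any particular product.

```python
from typing import Callable

# Hypothetical evaluation case: (input sent to the agent, output we expect back).
EvalCase = tuple[str, str]


def pass_rate(agent: Callable[[str], str], cases: list[EvalCase]) -> float:
    """Run every evaluation case through the agent and return the fraction it got right.

    Exact string matching is a deliberate simplification; real evaluations of
    AI output often need fuzzier scoring.
    """
    if not cases:
        raise ValueError("need at least one evaluation case")
    correct = sum(1 for prompt, expected in cases if agent(prompt) == expected)
    return correct / len(cases)


def has_drifted(baseline: float, current: float, tolerance: float = 0.05) -> bool:
    """Flag drift when the current pass rate falls more than `tolerance` below the baseline."""
    return current < baseline - tolerance


# Toy stand-in for an agent: it simply upper-cases its input.
cases = [("a", "A"), ("b", "B"), ("c", "C"), ("d", "X")]
rate = pass_rate(str.upper, cases)  # 3 of 4 cases match
print(rate, has_drifted(baseline=0.9, current=rate))  # prints: 0.75 True
```

The point of comparing against a baseline rather than a fixed bar is that the same score can be perfectly acceptable for one process and a worrying regression for another.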

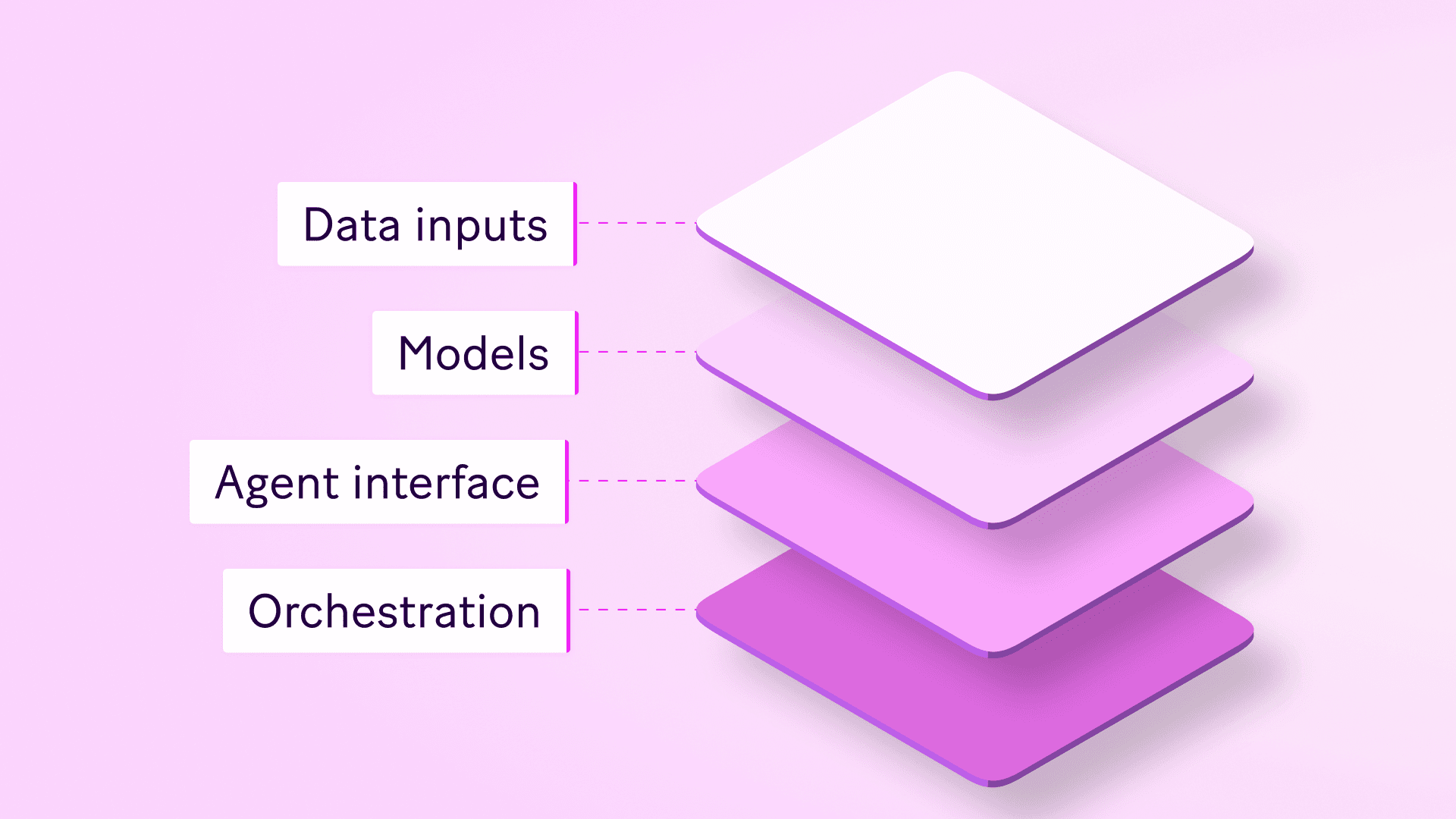

Our panel's moderator, Hyoun Park, CEO and Principal Analyst at Amalgam Insights, summed this up fairly neatly: "We’re talking about data access, putting some sort of model on top of that, having some sort of agent interface that you've customized, and then regulating the outputs. So we’ve got this kind of sandwich, or stack."

As with any tech stack, how the components of your AI stack handle these issues will significantly affect your capabilities, efficiency, scalability, and overall project success, so it pays to think them through carefully.

Quality data is more important than ever – but easier to achieve

As with any stable system, the data going into agentic systems is what will fundamentally differentiate a well-designed system from one with unpredictable outcomes. I think we all agree that data quality matters, but it has always been a challenge.

If you work with salespeople in any capacity, you know the memes about trying to get them to enter data into Salesforce or whichever CRM they use: getting them to do so consistently, and sometimes at all, has been the push (and the struggle) for years and years. So today we end up with bad data, try to turn it into very fine data points, run it through deterministic processes, and get sub-optimal results.

But I think that’s one of the greatest opportunities with AI generally. Whereas in old-school data structures and highly deterministic workflows you always have to get everything in the exact same format, in perfect quality, and in the exact same structures, AI is very good at, say, capturing sales calls, taking notes from that call, identifying the relevant data points, and then using those in a much more qualitative manner.

So in some ways, I think data quality is going to become less of a challenge for organizations to get right: AI agent systems are very good at collecting and recording more nuanced kinds of data.

Ethical, governance, and access issues will be key

At the same time, however, it’s going to be really important to think through different elements of access and transparency when trying to capture the data you need. One question I think about a lot: to what extent are agents required to identify themselves as AI agents?

If we’re talking about outbound prospecting, there are certainly going to be more rules and regulations. In the US, where I'm based, the Federal Communications Commission is working on that as we speak: whether an AI agent needs to self-identify as an agent, whether people have to opt in or opt out, and what those processes look like. We don't have solid answers yet, but it's something every organization is going to need to stay on top of.

But I also think about it inside organizations. I can imagine, a couple of years from now, joining a new company, having a few Zoom calls with three or four colleagues, and then learning a month later that one of them is actually an AI persona. It's an interesting thought experiment at the moment, but it's the sort of thing organizations are going to need to bring clarity to in the short term.

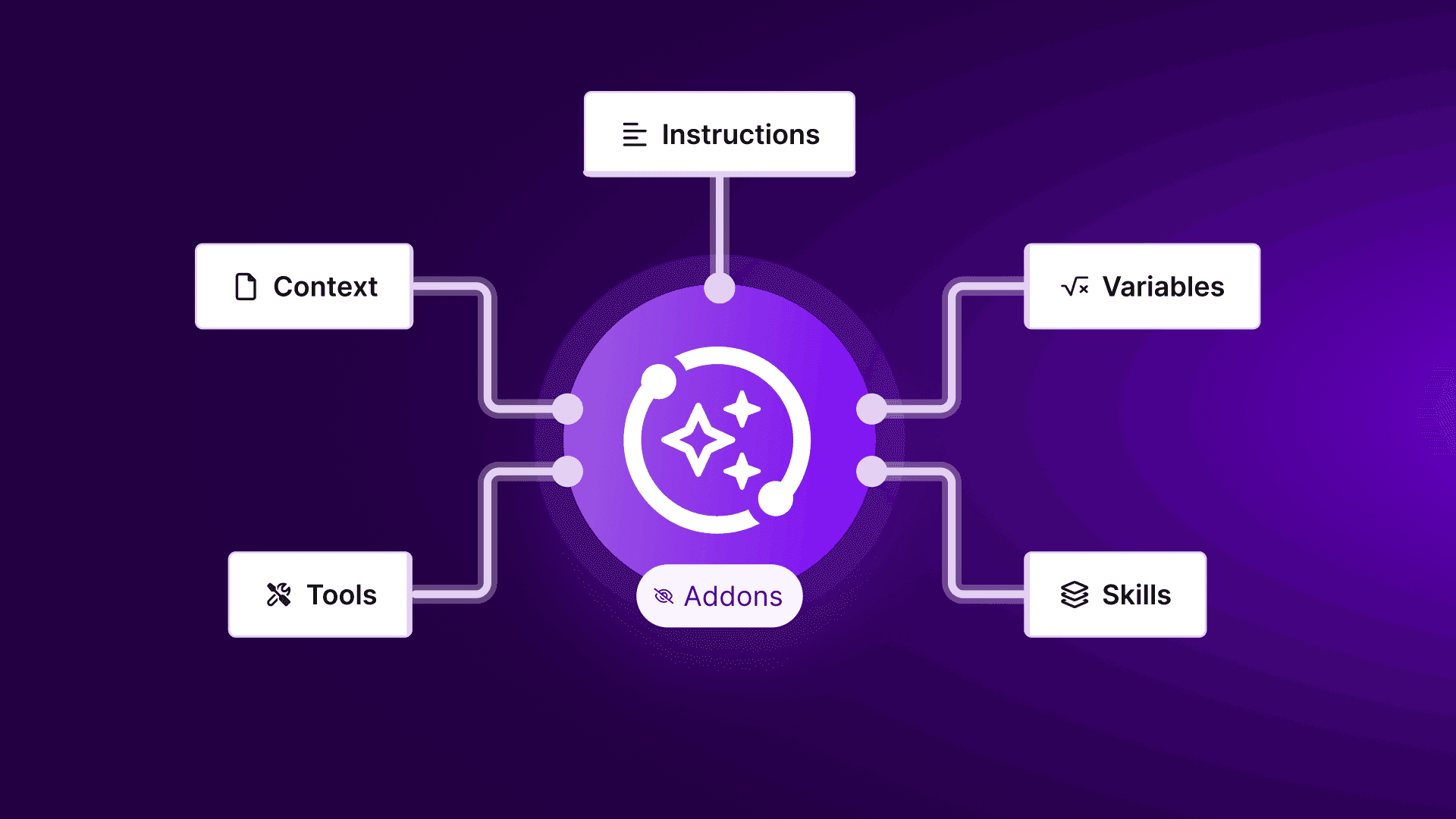

Wherever the application, significant consideration needs to go into what access AI agents should actually have to different systems and processes. As much as AI can be a game-changer, we need to make sure we don’t give AI agents carte blanche access to, for example, an entire CRM. A lot of bad things could ultimately happen with that, from loss of trust to legal issues to completely unintended consequences. So a thoughtful approach to deciding which tools, deterministic processes, and other resources AI agents should be able to interact with is going to be crucial.
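One way to picture that kind of scoped access is a per-agent allowlist that every tool call has to pass through. This is a minimal Python sketch, and everything in it is an assumption for illustration: the `AgentPolicy` class, the `call_tool` gatekeeper, and the CRM tool names are hypothetical, and a production system would also need authentication, logging, and finer-grained permissions.

```python
from dataclasses import dataclass
from typing import Any, Callable


class ToolAccessError(PermissionError):
    """Raised when an agent requests a tool outside its policy."""


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical least-privilege policy: the only tools this agent may call."""
    agent_name: str
    allowed_tools: frozenset[str]


def call_tool(policy: AgentPolicy, tool: str,
              registry: dict[str, Callable[..., Any]], *args: Any) -> Any:
    """Run a tool on the agent's behalf, but only if its policy explicitly allows it."""
    if tool not in policy.allowed_tools:
        raise ToolAccessError(f"{policy.agent_name} may not call {tool!r}")
    return registry[tool](*args)


# Hypothetical CRM tools: one narrow read, and one bulk export agents shouldn't touch.
registry = {
    "read_contact": lambda contact_id: {"id": contact_id, "stage": "lead"},
    "export_all_contacts": lambda: ["every", "record", "in", "the", "crm"],
}
prospecting = AgentPolicy("prospecting-agent", frozenset({"read_contact"}))

print(call_tool(prospecting, "read_contact", registry, 42))
try:
    call_tool(prospecting, "export_all_contacts", registry)
except ToolAccessError as err:
    print("blocked:", err)
```

The design choice worth noticing is deny-by-default: a tool the policy doesn't name simply can't be called, which is safer than trying to list everything an agent shouldn't do.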

AI models, mental models, and agent interfaces

Key to formulating such an approach is, of course, understanding your business processes. You have to understand exactly how things work in order to apply automation successfully, and that goes double for applying AI.

But even once you do grasp all the ins and outs of your operations, you may not always know exactly where AI will make the biggest impact – finding out requires experimentation. And experimenting successfully requires laying some groundwork, or constructing a model framework that helps you think about the resources at your disposal and how you can put them to work.

The first foundation to lay isn't a huge challenge, but it's ongoing: you need to know the strengths and weaknesses of various AI models. No AI on the market now is a flawless jack-of-all-trades, and it will be some time before anything close hits the market, so you've got to keep up with the latest developments in the meantime.

Second, you need a business model that encourages experimentation, testing, and iterating. This is one of the secrets that hyper-lean teams surging toward $100M ARR have unlocked: every member of your team should come equipped with the drive to try new things, fail often, and add value incrementally with every iteration.

Most important of all, you've got to empower your team with the tools to put their iterative spirit into practice. That means getting comfortable building over and over again within an interface that's both powerful and flexible: one that lets you swap AI models in and out to find the best fit, and one that lets anyone maximize the potential of automations, whether their coding knowledge is minimal or expert.

In other words, building your company's knowledge base, culture, and AI stack around clear principles of accessible experimentation and constant progression is the model that will allow you to thrive in the era where agentic systems are disrupting traditional ways of doing business.

Regulating outputs needs to be accessible and visual

So there's a new skill set being developed across lots of traditional roles and responsibilities. You're going to need to understand how AI works in a business process, and very often you’re going to be asking your employees to design and manage a whole range of processes. Understanding the relationships between tools, agents, and their inputs and outputs all goes back to the question that kicked off this piece: how do we evaluate performance and, ultimately, results?

I happen to think it's most effective and most accessible to visualize as much as possible. This is one of the reasons I was drawn to Make's way of thinking: I've spent a lot of time over the last few years thinking about how to visualize automations within existing workflows and processes. Seeing how data flows from one step to another is a far more efficient and effective way to understand how things work, spot bottlenecks, and adapt with more elegant solutions, fast.

But with the rise of AI agents, I'm thinking about ways to visualize the next layers of complexity. Where is traditional automation doing the job admirably, and where is an AI power-up needed? Where does an AI agent need a new tool, and where does a tool's output need more tuning? And as task-focused agents work together, how do we manage agent-to-agent interactions in order to achieve broad-spectrum agentic systems? You're going to need to ensure that all these moving parts work together as complexity increases.

Orchestration, then, will be the watchword as we embed AI deeper into our processes. And because people with widely varied levels of technical know-how are going to be required to manage AI-driven processes, you can (you almost have to) let them do that visually, even if the interactions themselves happen behind the scenes when no one is actively working with them. Visual orchestration will be critical to ensuring that your AI stack does the most it can across your business and gives you the outputs you need, quickly and consistently.

Building your AI-first stack

So I think Hyoun Park, our moderator, had it right: implementing AI successfully is going to hinge on conceptualizing an AI stack. To slightly reframe his summary, I'd say data, access, interfaces, and orchestration are the key components every AI stack will need to cover – the framework that every company will need to rely on as we move into an increasingly agentic world.